How Neuronanotechnology Will Lead to Melding of Mind and Machine

Ray Kurzweil


The evolution of an information backbone to biology, DNA, took billions of years, although actually RNA came first. And then evolution used DNA to evolve the next stage. It now had an information backbone which it’s used ever since, so the Cambrian explosion, when all the body plans of all the animals were evolved, took place 100 times faster, in 10 or 20 million years.

And then those body plans became a mature technology and evolution concentrated on higher cognitive functions and that only took millions of years. And then Homo sapiens evolved in only a few hundred thousand years.

There are only three simple but giant changes that distinguish us from our primate ancestors, involving only a few tens of thousands of bytes of information. One was a larger skull, at the expense of a weaker jaw, so don’t get into a biting contest with another primate. Another is our cerebral cortex, so we can do “what if” experiments in our mind, abstract reasoning, such as: if I took that stone and that stick and tied them together with that twine, I could actually extend the leverage of my arm.

And then we have opposable appendages that work, so we could carry out these “what if” experiments and change the environment. A chimpanzee hand looks similar, but the pivot is down one inch, and basically it just doesn’t work very well. Chimpanzees don’t have a power grip, they don’t have fine motor coordination, and they’re pretty clumsy if you watch them.

So we could effectively change the environment and create tools, and the first stage of that was a little bit faster. We took tens of thousands of years for fire, wheels, stone tools, but then we always use the latest generation of tools to create the next set of tools and so technology evolution has accelerated. And from the straight line on this logarithmic graph, technology evolution is emerging smoothly out of biological evolution. If we put this on a linear scale it looks like everything has just happened.

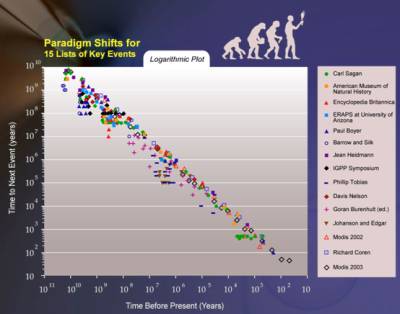

Image 10: Paradigm Shifts

Now some people said Kurzweil would only put points on this graph if they fit on a straight line. To address that I took 14 different lists from 14 different thinkers: Encyclopedia Britannica, American Museum of Natural History, Carl Sagan’s cosmic calendar, a dozen other lists. There can be disagreement between the lists: some people think the Cambrian explosion took 25 million years, some people include the ARPANET with the Internet, and there is disagreement about when language started.

Therefore there’s some spreading of the points. But there’s a clear trend line, and clear acceleration. Nobody thinks the Internet took a million years to evolve, nobody thinks the Cambrian explosion happened in ten years. A billion years ago not much happened in a million years.

There’s a clear acceleration in this evolutionary process. And it’s to the point now where it’s very fast, and will continue to accelerate.

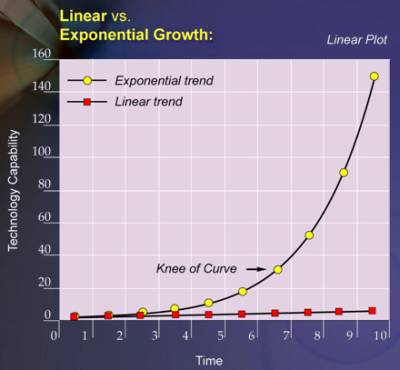

Image 11: Linear vs. Exponential Growth

If you compare an exponential to a linear progression, they look the same for a few years. Even if you go to the steep part of the exponential and take a small piece of it, it looks like a straight line. A straight line is a good approximation of an exponential for a short period of time. It’s a very bad approximation for a long period of time.
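The short-span versus long-span point can be checked numerically. The following is a minimal sketch, assuming a quantity that doubles once per unit of time; the function names, and the choice of a tangent line as the “straight line,” are illustrative assumptions rather than anything from the talk.

```python
import math

def exponential(t):
    """Quantity that doubles once per unit of time (an assumed growth rate)."""
    return 2.0 ** t

def tangent_line(t0, dt):
    """Straight-line extrapolation: keep the slope seen at t0 and extend it by dt."""
    slope = math.log(2.0) * exponential(t0)  # derivative of 2**t at t0
    return exponential(t0) + slope * dt

def relative_error(t0, dt):
    """Fraction by which the straight line misses the true exponential after dt."""
    exact = exponential(t0 + dt)
    return abs(exact - tangent_line(t0, dt)) / exact

if __name__ == "__main__":
    for dt in (0.1, 1.0, 5.0, 10.0):
        print(f"dt={dt:>4}: straight line is off by {relative_error(20.0, dt):.1%}")
```

Over a tenth of a doubling period the line is off by well under one percent; over ten doubling periods it captures less than one percent of the true value, wherever on the curve the line is drawn.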

And I think we are hardwired to think in linear terms. I’ve had countless debates with scientists who assume a linear progression. For example, one was with a neuroscientist who had spent 18 months modeling one ion channel. He added up all the other similar nonlinearities in other ion channels and said it would take 100 years.

He’s assuming that it’s going to take 18 months for every ion channel and every nonlinearity, and that 50 years from now there’s going to be no progress in computers, in scanners, or in the ability to model these types of phenomena. He’s basically thinking in linear terms.
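A rough back-of-the-envelope version of this argument, as a sketch only: suppose the job amounts to 100 years of work at today’s productivity, and suppose the tools double that productivity at a fixed interval. The one-year doubling period is an assumption borrowed from the yearly doubling claimed for information technology elsewhere in this talk, and `years_with_improving_tools` is a hypothetical helper written for this illustration.

```python
import math

def years_with_improving_tools(linear_years, doubling_period=1.0):
    """Calendar years needed to finish `linear_years` of work (measured at
    today's productivity) if productivity doubles every `doubling_period` years.

    Work done from time 0 to T is the integral of 2**(t / d) dt,
    which equals (d / ln 2) * (2**(T / d) - 1). Setting that equal to
    linear_years and solving for T gives the expression returned below.
    """
    d = doubling_period
    return d * math.log2(1.0 + linear_years * math.log(2.0) / d)

if __name__ == "__main__":
    estimate = years_with_improving_tools(100.0)
    print(f"Linear estimate: 100 years; with yearly doubling: {estimate:.1f} years")
```

Under these assumptions the 100-year estimate shrinks to a little over six years. The exact figure depends entirely on the assumed doubling period; the point is only that a constant-rate extrapolation leaves out improvement in the tools.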

The ongoing exponential is made up of a series of S-curves. People say exponential growth can’t go on forever. Rabbits in Australia ate up all the foliage and then the exponential growth stopped. And that’s true: any specific paradigm stops when it reaches the limit of that paradigm to provide exponential growth.
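One way to picture a series of S-curves adding up to sustained growth is to stack logistic curves, each with a higher ceiling than the last. This is an illustrative sketch; the logistic form, the number of paradigms, the spacing between them, and the 100-fold gain per paradigm are all assumptions made for the example, not figures from the talk.

```python
import math

def logistic(t, ceiling, midpoint, steepness=1.0):
    """A single S-curve: slow start, rapid growth, then saturation at `ceiling`."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))

def cascade(t, n_paradigms=5, spacing=10.0, gain=100.0):
    """Sum of successive S-curves; paradigm k has ceiling gain**k and
    reaches its midpoint at time spacing * k (all values assumed)."""
    return sum(logistic(t, gain ** k, spacing * k) for k in range(n_paradigms))

if __name__ == "__main__":
    print(" t   first paradigm   all paradigms   log10(all)")
    for t in range(0, 45, 5):
        single = logistic(t, 1.0, 0.0)
        total = cascade(t)
        print(f"{t:2d}   {single:14.4f}   {total:13.1f}   {math.log10(total):10.2f}")
```

Each individual curve flattens at its own ceiling, while the sum keeps climbing by roughly the assumed gain from one paradigm to the next, until the last paradigm in the list saturates.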

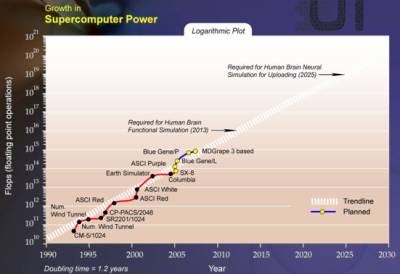

Image 12: Growth in Supercomputer Power

What we find in information technology is that the approaching limit leads to research pressure that creates the next paradigm. I’ll show you in a moment how this happened five times in computers.

People say there must be some ultimate limit of matter and energy, based on what we know about physics, to support computation and communication, and yes, there is. In Chapter 3 I talk about that. There are ultimate limits but they’re not very limiting. One cubic inch of nanotube circuitry would be 100 billion times more powerful than the estimate that I used for simulating the several hundred regions of the human brain, and that’s not even the ultimate limit.

So, there are limits but they’re not very limiting and we will be able to continue the double exponential growth in computation well into this century.

Information technology doubles its power, in terms of price performance, capacity, and bandwidth, every year. When I was at MIT in ’67 an eleven million dollar computer took up about five times the size of this room. It was shared by thousands of students and was about one thousand times less powerful than the computer in your cell phone today.

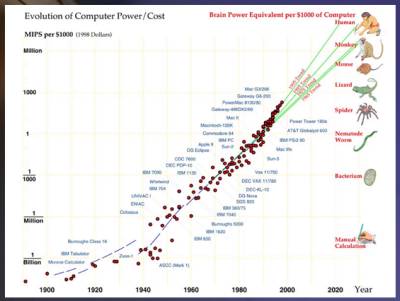

Image 13: Computer Power/Cost

If we look at this chart of over a century of computing, the data processing equipment used in the 1890 census is in the lower left hand corner. Then relays were used to crack the German Enigma code. There’s a lot of interesting literature about how the English had to either ignore that information or convince the Germans that they didn’t get it through breaking the code but got it some other way.

But they used it without reservation in the Battle of Britain. It enabled the outnumbered RAF to win that battle. In the ’50s, they were using vacuum tube based computers. CBS predicted the election of Eisenhower, the first time the networks did that.

They were shrinking vacuum tubes every year, making them smaller and smaller to keep the exponential growth going, and finally, that hit a wall, and that was the end of the shrinking of vacuum tubes. It was not the end of exponential growth of computing. We then went on to transistors, which are not small tubes; it’s a whole different approach. Then we’ve had several decades of integrated circuits and Moore’s Law [1], which basically states that the size of transistors on an integrated circuit is shrinking. You can put twice as many in every two years, and they run faster.
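The doubling arithmetic behind this statement is simple to write down. A minimal sketch, assuming a fixed doubling period (two years here, per the statement above); the function names are illustrative.

```python
import math

def growth_factor(years, doubling_period=2.0):
    """How many times larger a quantity becomes after `years`
    if it doubles every `doubling_period` years."""
    return 2.0 ** (years / doubling_period)

def years_to_multiply(factor, doubling_period=2.0):
    """Years needed for the quantity to grow by `factor`."""
    return doubling_period * math.log2(factor)

if __name__ == "__main__":
    for years in (2, 10, 20, 40):
        print(f"after {years:2d} years: x{growth_factor(years):,.0f}")
    print(f"years to reach x1000: {years_to_multiply(1000.0):.1f}")
```

Ten years of two-year doublings gives a 32-fold increase, and a 1,000-fold increase takes about twenty years. Setting `doubling_period=1.0` models the yearly doubling of price performance mentioned earlier.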

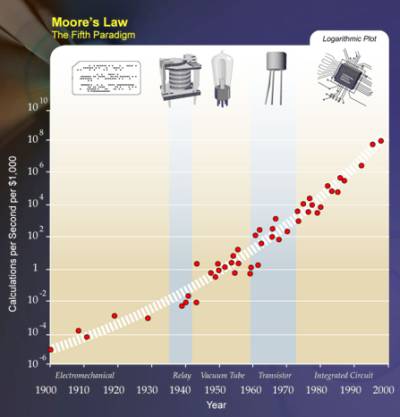

Image 14: Moore's Law

And the end of Moore’s law has been predicted for some time; the first predictions were for 2002. Predictions now are for 2022. There’s an ITRS roadmap, a thick document that’s existed for years in the semiconductor industry with tremendous detail of every aspect of every kind of chip, going out fifteen to twenty years in the future; it runs now to 2020. By that time chips will have four nanometer features, twenty carbon atoms, and this has been followed very rigorously for several decades. But by that time, conventional two dimensional chips will be able to provide enough computation to simulate all regions of the brain, and I’ll get to that in a moment, for one thousand dollars, based on conventional chips.

Footnote

1. Moore’s Law describes an important trend in the history of computer hardware: that the number of transistors that can be inexpensively placed on an integrated circuit is increasing exponentially, doubling approximately every two years. http://en.wikipedia.org/wiki/Moore's_law (November 13, 2007 3:41PM EST)